Requirement coverage analysis is a key aspect in Xray

A requirement may be covered by one or multiple Tests. In fact, the status of a given requirement goes well beyond the basic covered/not covered information: it also takes your test results into account.

As soon as you start running your Tests, each individual test execution result may be one of many possible values, some of them very specific to your use case.

To make your analysis even more complex, you may be using sub-requirements and executing related Tests.

Thus, how do all these factors contribute to the calculation of a requirement status?

Let’s start by detailing the different possible values for the requirement, Test and Test Step statuses. In the end, we’ll see how they affect the calculation of the coverage status of a requirement in a specific version.

Requirement statuses

In Xray, for a given requirement, its coverage status may be:

- OK – All the Tests associated with the Requirement are PASSED

- NOK – At least one Test associated with the Requirement is FAILED

- NOT RUN – At least one Test associated with the Requirement is TODO or ABORTED and there are no Tests with status FAILED

- UNKNOWN – At least one Test associated with the Requirement is UNKNOWN and there are no Tests with status FAILED

- UNCOVERED – The Requirement has no Tests associated

It’s not possible to create custom requirement statuses, as this value is computed as mentioned above.
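The rules above can be read as a small aggregation function. The following Python sketch is illustrative only and is not Xray's implementation: the function name `requirement_status` is hypothetical, the Test status names are the ones used in the list above, and checking NOT RUN before UNKNOWN is an assumption, since the list does not rank those two.

```python
def requirement_status(test_statuses):
    """Aggregate the statuses of the covering Tests into a coverage status."""
    statuses = list(test_statuses)
    if not statuses:
        return "UNCOVERED"   # the requirement has no Tests associated
    if "FAILED" in statuses:
        return "NOK"         # a single failed Test is enough
    if any(s in ("TODO", "ABORTED") for s in statuses):
        return "NOT RUN"     # assumption: evaluated before UNKNOWN
    if "UNKNOWN" in statuses:
        return "UNKNOWN"
    if all(s == "PASSED" for s in statuses):
        return "OK"
    # Anything else (for example a custom status) is outside this sketch.
    raise ValueError(f"status not handled by this sketch: {statuses}")


print(requirement_status(["PASSED", "TODO"]))
```

Note how a single FAILED Test dominates every other result, while OK requires every covering Test to have passed.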

You can see that in order to calculate a requirement’s coverage status, for some specific system version, we “just” need to take into account the status of the related Tests for that same version. We’ll come back to this later on.

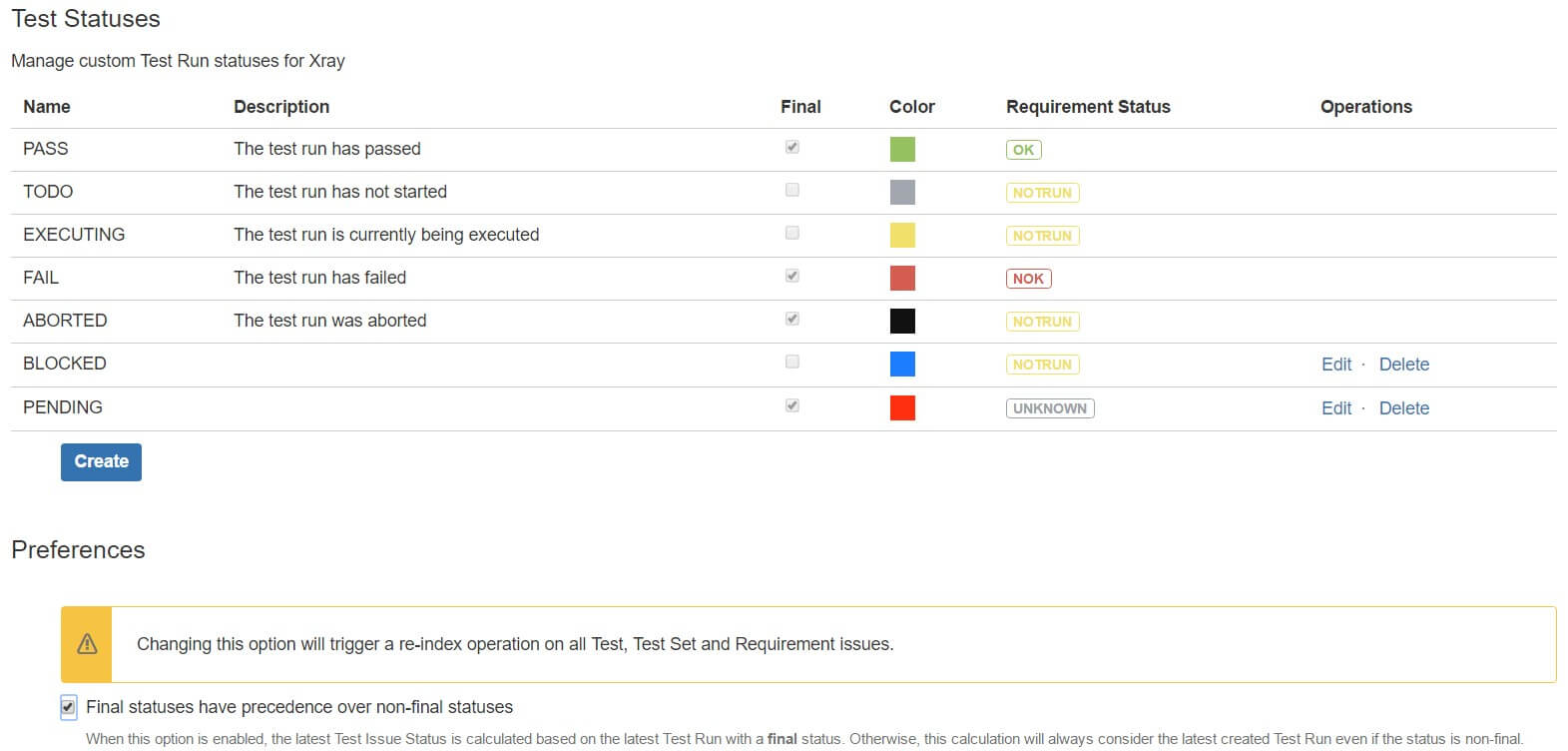

Test statuses

Xray provides some built-in Test Run statuses (which can’t be modified or deleted):

- TODO – Test is pending execution; this is a non-final status;

- EXECUTING – Test is being executed; this is a non-final status;

- FAIL – Test failed

- ABORTED – Test was aborted

- PASS – Test passed successfully

Each of these statuses maps to a requirement status, according to the following table.

The status (i.e. result) of a Test Run is an attribute of the Test Run (a “Test Run” is an instance of a Test and is not a JIRA issue) and is the one taken into account to assess the status of the requirement.

On the other hand, a completely different thing is the TestRunStatus custom field, which is a calculated field that belongs to the Test issue and that takes into account several Test Runs; the TestRunStatus does not affect the calculation of the status of requirements.

Managing Test Statuses

Creating new Test Run statuses may be done in the Manage Test Statuses configuration section of Xray.

Whenever creating/editing a Test status, we have to identify the Requirement status we want this Test status to map to.

One important attribute of a Test status is the “final” attribute. If the “Final Statuses have precedence over non-final” flag is enabled, then only Test Runs in final statuses are considered for the calculation of the requirement status.

Only final statuses will appear in the TestRunStatus custom field as well.
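To illustrate the “final” attribute, the sketch below picks the Test Run result that would count for a Test. It is a hedged reading of the paragraph above, not Xray's code: `NON_FINAL_STATUSES` and `effective_run_status` are hypothetical names, treating every status other than TODO and EXECUTING as final is an assumption, and so is the fallback to the latest run when no run is in a final status.

```python
# TODO and EXECUTING are the built-in statuses described as non-final.
NON_FINAL_STATUSES = {"TODO", "EXECUTING"}


def effective_run_status(run_statuses, final_has_precedence=True):
    """Return the status that counts, given run statuses ordered oldest to newest."""
    if not run_statuses:
        return None  # the Test has no runs at all
    if final_has_precedence:
        final_runs = [s for s in run_statuses if s not in NON_FINAL_STATUSES]
        if final_runs:
            return final_runs[-1]  # latest run that reached a final status
    # Flag disabled, or no final run yet (assumed fallback): latest run wins.
    return run_statuses[-1]
```

With the flag enabled, a Test that passed and is now being re-executed would still count as PASS under this reading; with the flag disabled, the EXECUTING run would be the one considered.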

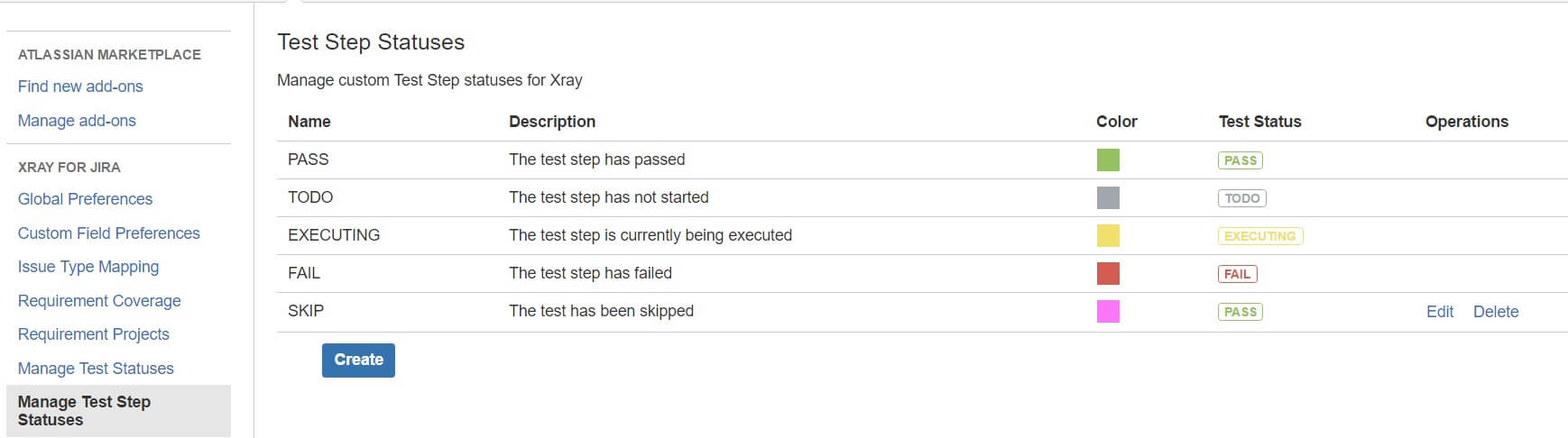

Test Step statuses

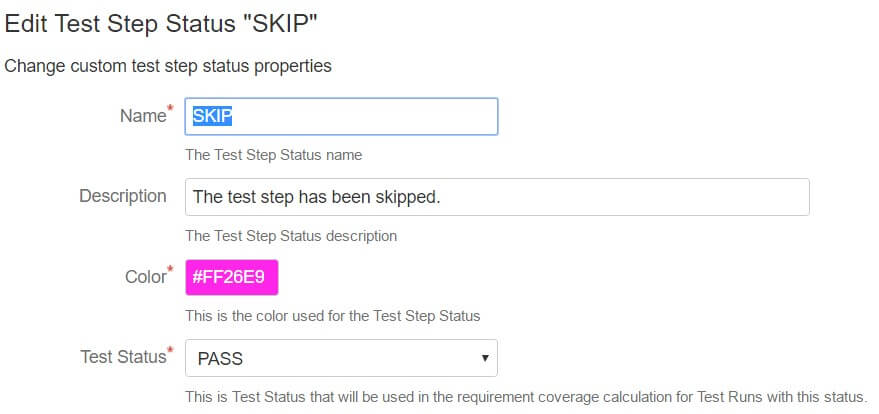

Creating new Test Step statuses may be done in the Manage Test Step Statuses configuration section of Xray.

Whenever creating/editing a Test Step status, we have to identify the Test status we want this step status to map to.

Note that native Test Step statuses can’t be modified or deleted.

Calculation of the status of a given Requirement

It is possible to calculate the status of a Requirement either by Version or Test Plan.

By Version:

For a given requirement X, in order to calculate the coverage status for version V, we need to evaluate the statuses of the related Tests that were executed on that same version V.

By Test Plan:

For a given requirement X, in order to calculate the coverage status for Test Plan TP, we need to evaluate the statuses of the related Tests that were executed in Test Executions associated with Test Plan TP.

The algorithm is:

- Obtain the list of Tests that cover the requirement, either directly or indirectly through Sub-Requirements (info here)

  - This depends on the Requirement Coverage configuration

- Calculate the Test status for all the Tests, in version V or Test Plan TP

  - This takes into account Test Runs in version V (as a result of Test Executions in version V) or Test Runs in Test Plan TP (within Test Executions associated with Test Plan TP)

  - If a specific Environment is also chosen, then only Test Runs from Test Executions with this Environment will be considered. If no Environment is specified, then all Test Executions are considered (info here).

- Calculate the overall requirement status according to the rules explained earlier in the “Requirement statuses” section, aggregating all Test results.
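The steps above can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not Xray's actual implementation: the `Run` class, `covering_tests`, `coverage_status` and the `TO_REQUIREMENT_STATUS` mapping are hypothetical, runs are assumed to be ordered oldest to newest, a Test with no run in scope is treated as TODO, and taking the latest run across all environments when none is chosen is a simplification.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Run:
    """A Test Run reduced to the attributes this sketch needs."""
    test: str
    status: str                       # e.g. "PASS", "FAIL", "TODO"
    version: Optional[str] = None
    test_plan: Optional[str] = None
    environment: Optional[str] = None


# Assumed mapping from built-in Test statuses to requirement statuses.
TO_REQUIREMENT_STATUS = {
    "PASS": "OK", "FAIL": "NOK",
    "TODO": "NOT RUN", "EXECUTING": "NOT RUN", "ABORTED": "NOT RUN",
}


def covering_tests(req, tests_by_req, sub_reqs):
    """Step 1: Tests covering req directly or through its sub-requirements."""
    tests = set(tests_by_req.get(req, ()))
    for child in sub_reqs.get(req, ()):
        tests |= covering_tests(child, tests_by_req, sub_reqs)
    return tests


def coverage_status(req, tests_by_req, sub_reqs, runs,
                    version=None, test_plan=None, environment=None):
    tests = covering_tests(req, tests_by_req, sub_reqs)
    if not tests:
        return "UNCOVERED"
    mapped = []
    for test in sorted(tests):
        # Step 2: keep only runs in the chosen version / Test Plan / Environment.
        scoped = [r for r in runs
                  if r.test == test
                  and (version is None or r.version == version)
                  and (test_plan is None or r.test_plan == test_plan)
                  and (environment is None or r.environment == environment)]
        # Latest run in scope wins; no run in scope counts as TODO (assumption).
        status = scoped[-1].status if scoped else "TODO"
        mapped.append(TO_REQUIREMENT_STATUS.get(status, "UNKNOWN"))
    # Step 3: aggregate, with NOK first (NOT RUN before UNKNOWN is an assumption).
    for candidate in ("NOK", "NOT RUN", "UNKNOWN"):
        if candidate in mapped:
            return candidate
    return "OK"
```

For example, with `tests_by_req = {"REQ-1": ["T1"]}` and a single `Run("T1", "FAIL", version="1.0")`, calling `coverage_status("REQ-1", tests_by_req, {}, runs, version="1.0")` yields NOK, while the same call for a version with no runs yields NOT RUN.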

Note: do not mix up the status of a given requirement with the Requirement Status custom field, which shows the status of a given requirement for a specific version, depending on the configuration under Custom Field Preferences.

In sum…

The status of a requirement in some Version or Test Plan depends on the status of the related Tests. However, this is affected by several factors such as Test Environments and the existence or not of sub-requirements.

Xray’s flexibility allows you to customize the allowed Test and Test Step statuses, which in turn affects the status of requirements.

It works quite well for me

Great to hear that Ruthie. Anything you need from our side, you know where to reach us!

Hello,

I have a question.

I have 2 requirements: ReqA and ReqB

I create 2 sub test executions and link the same test cases to both, because the test case is the same and only the environment changes.

I execute the first test execution and it fails for ReqA, but ReqB is automatically marked as NOK too, although ReqB is actually OK because the test works for its specific environment.

From my point of view, as the test run status depends on the “test execution”, it should not be shared between requirements, because that prevents reusability.

For this specific situation, I have to duplicate the test cases to avoid falsifying the requirement status.

Is there another workaround for this situation?

Thanks and regards

Hi Ana,

For this kind of question, the best approach is to contact our support team. They will answer you quickly 🙂

Please follow this link and ask for our help there: http://jira.xpand-addons.com/servicedesk/customer/portal/2

Hi,

I have the same question as Ana and I think this is a general question, so I would appreciate an answer here for many to read.

If you do not want to use Fix Versions, for example because you implement continuous delivery, the behaviour described by Ana prevents you from reusing Tests, one of the central advantages of using Xray.

How can we make TestRun statuses affect only the related Requirements? Right now the status of the *Tests* hits *all* requirements, independently of the TestRun. Shouldn’t each Test Execution (and its TestRuns) affect only related Requirements?

Regards,

Johan

Hi Ana and Johan,

Let me give some feedback on your previous questions.

Whenever you report a result to a given Test Run (i.e. a Test in the context of a Test Execution), by default that result will affect the calculation of all the requirements that are being validated by that Test.

However, there’s a setting named “Separation of Concerns”, available in the “Requirement Coverage” section of Xray administration settings, that you can use in order to fulfill your needs. If you uncheck it, you can report how each result affects the calculation of the status of the linked requirements, and you can do so independently for each requirement.

More info about it here:

https://confluence.xpand-addons.com/display/XRAY/Requirements+Coverage#RequirementsCoverage-SeparationofConcerns

Another thing to keep in mind is that Xray supports Test Environments, so you can also report and track results independently for each Test Environment. More info here: https://confluence.xpand-addons.com/display/XRAY/Working+with+Test+Environments

If you’re using CI+CD and using either Jenkins/Bamboo/REST API in order to submit results, you can specify the version under test and also the Test Environment; however, you can’t specify how you want each Test Run result to affect the related requirements. The only way to overcome this is to have different Tests that validate each requirement properly and more specifically.

If you’re executing a Test linked to multiple requirements and you want to control how it affects the status calculation of each related requirement, the best approach would be to decompose your test scenarios further, so you have a clearer idea of what exactly is being tested by each Test.

Regards,

Sergio

Hi Sérgio!

Thank you very much for a comprehensive and clarifying answer!

We will try the Separation of Concerns configuration. Otherwise I think we will have to consider implementing Fix Versions in our CD workflow.

Test Environments are great! They work very well for us in our workflow.

Regards,

Johan

This is regarding the X-Ray Requirement Status. A story from the DB2 DBA team’s JIRA project is showing a Requirement Status field that is set to Uncovered. The Db2 DBA team doesn’t use X-Ray, as they don’t have any application to test. How can I make this go away? I was told this field is for X-Ray testing status. I appreciate your support and help.

Hello Mana,

This means that “Requirement Coverage Analysis” is enabled on your project. To disable it, go to Project Settings -> Actions (button at the top of the page) -> Disable Xray Requirement Coverage.

If you have any problem, please raise a support ticket here.

Thank you,

Xray Team

Hi

I have finally managed to configure the requirements to be calculated based on the Fix Version of the requirement story, so the correct statuses of the same Tests across different requirements with separate Fix Versions are taken care of.

Now I would just like to know whether we can change the default value of the version under Test Coverage, which presently defaults to “None – Latest Execution”, to, say, “First assigned”, so that we always see the requirement and test run results in sync, with the option for the user to later change versions for different run statuses.

Thanks

Hi,

In the Administration Settings you can choose which versions are shown on the Requirement Status Custom field installed with Xray and the available options are:

No Version

When this option is enabled, the status of each Test associated with the Requirement is calculated based on the latest TestRun, regardless of the version.

Earliest Unreleased Version

When this option is enabled, only the earliest unreleased Fix Version is displayed in the Custom Field.

Unreleased Versions

When this option is enabled, all unreleased Fix Versions are displayed in the Custom Field.

Assigned Versions

When this option is enabled, all explicitly assigned Fix Versions are displayed in the Custom Field.

To check this configuration you can go to Administration > Add-ons > Xray > Custom Fields.

Please follow this link if you need more: http://jira.xpand-addons.com/servicedesk/customer/portal/2

Best regards,

Xray Team

Thanks for this nice introduction to test statuses. Although I managed to get an overview, I need to read the test status out of the database. I can see the tables that hold the user-defined Test and Test Step statuses, but I cannot find the mapping of the default Test statuses such as “Todo”, “Fail” and “OK”.

Are these hard coded values? If so, what value corresponds to what status? Is there a database schema for XRAY you could share for these purposes, because I really need to pull out some serious reports from the test data that your confluence macros are not capable of.

Would be nice to hear from you,

Moritz

Hi Moritz,

We suggest contacting our fantastic support team through our Support Desk to help you with your question. They’ll be able to better understand your needs and help you. 🙂

https://jira.xpand-it.com/servicedesk/customer/portal/2

Best Regards,

Xray Team

Hi Jose

When you change that, you change the “Requirement status” custom field, but what about the “Test Coverage” section? Can it be changed so that “first assigned” is the default?

Hi Cesar,

Prior to changing the requirement status, you can also change the configurations of Test Run Status and Test Set Status on the same section (Xray – Custom Fields).

The Test Run Status custom fields have the following options:

No Version

When this option is enabled, the status of the Test is calculated based on the latest TestRun, regardless of the version on which it was executed.

Earliest Unreleased Version

When this option is enabled, the status of the Test is calculated based on the latest TestRun executed on the earliest unreleased Version.

First Assigned Version

When this option is enabled, the status of the Test is calculated based on the latest TestRun executed on the first Fix Version explicitly assigned to the Test.

Hope this helps!

Best regards,

Team Xray

Hello,

I’ve been trying to set up test coverage for my project. There are two issues I wanted to share with you:

1-) My understanding of the term test coverage is “how many user stories/epics are covered by written test cases”. For coverage, user stories and epics are my priority. As a user, I want to cover user stories by writing test cases and linking each test case to the related user story, so that I can be sure what percentage of user stories is covered. However, the Xray test coverage report focuses on test executions, not on test cases, for covering issues. Right? Correct me if I’m wrong.

To sum up this issue, I just want to know how many test cases I have and how many user stories/epics they already cover, without touching the test run issue type. How do I do this?

2-) If the first request isn’t possible, I have another question. When test cases are linked to user stories, the test coverage report focuses on test execution status, not on the existence and number of test cases. When I have 3 test runs for a test case (one failed, the rest succeeded), test coverage takes the failed test run status. However, the 2 successful test runs were performed after the failed one, following a bug fix.

So I actually covered that user story: I had a bug but fixed it. But test coverage takes the failed test run into account and reports the wrong status. If test coverage took the latest test run status into account, the report would be correct.

On the other hand, changing the old test run status after the bug fix just to correct the test report isn’t reasonable or truthful.

The question is: how do I set test coverage to check the latest test run status?

I hope both issues were clear. I look forward to hearing from you.

Hello, my question is about the ‘Abort’ status in a Test Case. Could you explain the purpose of this status in a bit more depth? Thank you.

Dear XRay team,

I have set up a small demo project and linked my user stories to tests. Those tests are part of test sets, test plans and test executions, just like you showed in your webinars. However, no matter what I do (including different combinations of versions), the RequirementStatus(es) on the story screen always show ‘Uncovered’.

Is there any other setting needed?

Thanks a lot

Uwe

Hi Uwe,

Please reach out to our support team through service desk: https://jira.xpand-it.com/servicedesk/customer/portal/2

They’ll be happy to help you.

Best regards,

Team Xray